Artificial intelligence (AI) was created to make people’s lives easier, yet there is much discussion about how algorithms often make decisions for us, collect our data, and so on. Today, AI plays this assisting role in almost every sphere, including the media.

As part of Media Literacy Week, the Media Initiatives Center hosted representatives of the National Innovation Center for Sustainable Development Goals of Armenia. Henrik Sergoyan, the Center’s data expert, spoke about new AI technologies and tools and about the positive and negative aspects of applying them in the media.

Pros

Content creation

Many reporters who work with texts every day may not realize that simply by typing in a headline, they can get a relevant piece of text that does not yet exist anywhere on the Internet. Models such as 6b.eleuther.ai and studio.ai21.com/playground do exactly this. Although the generated texts are written by an algorithm, they read as if a human wrote them. The models work in English, but translating their output into Armenian is not a big problem today: translation tools have also improved greatly.

Development and combination

According to Sergoyan, the best data processor is our everyday companion Google, which seems to know everything. The search engine now answers our questions directly instead of only pointing us to an article and telling us to look for the answer on that page.

For example, if we ask Google when World Press Freedom Day is, it answers: Tuesday, May 3, 2022.

Suggesting similar content

This feature of AI sometimes frightens people, who suspect that their smartphones and smart devices are watching them. In fact, the algorithm uses the content and clicks consumed on these devices to suggest something else that may also interest the user, making the Internet experience more personalized.

For example, when we log in to Facebook, we often see a company, periodical, or store offering an alternative similar to a product we just searched for, and sometimes the very same product.
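To make the idea concrete, here is a minimal sketch of a content-based recommender in Python. It is a toy illustration, not how Facebook or any real platform works: the catalogue, the topic tags, and the `recommend` function are all hypothetical. Each item the user clicked adds its tags to a simple interest profile, and unseen items that share the most tags with that profile are suggested first.

```python
from collections import Counter

# Hypothetical catalogue: each item is described by a few topic tags.
CATALOGUE = {
    "running shoes A": {"sport", "shoes"},
    "running shoes B": {"sport", "shoes"},
    "yoga mat": {"sport", "fitness"},
    "cookbook": {"food", "books"},
}


def recommend(clicked, catalogue, k=2):
    """Rank items the user has not clicked by how often their tags
    appear in the user's click history (a toy content-based score)."""
    # Build an interest profile: how many times each tag was clicked.
    profile = Counter(tag for item in clicked for tag in catalogue[item])
    # Only suggest items the user has not already clicked.
    candidates = [item for item in catalogue if item not in clicked]
    ranked = sorted(
        candidates,
        key=lambda item: sum(profile[tag] for tag in catalogue[item]),
        reverse=True,
    )
    return ranked[:k]


print(recommend(["running shoes A"], CATALOGUE))
```

After a single click on one pair of running shoes, the sketch suggests the other pair first and the yoga mat second, while the unrelated cookbook scores zero. Real systems combine far more signals, but the principle of "more of what you already clicked" is the same.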

Information filtering

Henrik Sergoyan considers information filtering, which many of us encountered during the war, to be the most practical innovation of recent years.

For example, Instagram successfully blurs photos and videos depicting violence, murder, blood, or sexually explicit content, shielding many people from unwanted scenes.

Fight against misinformation and fake news

Among social networks, Facebook in particular is now actively fighting misinformation and misleading content. However, the network does not have its own fact-checking tools for a less widely used language such as Armenian. In Armenia, this work was carried out by the Georgian “Fact-Meter” (FactCheck Georgia) in cooperation with the Media Initiatives Center, as part of Facebook’s third-party fact-checking program.

Cons

Fluctuation of political moods

AI’s help is often irreplaceable, but that does not rule out negative consequences. AI often falls into the hands of people and groups with bad intentions, causing problems and sometimes even breaking the law.

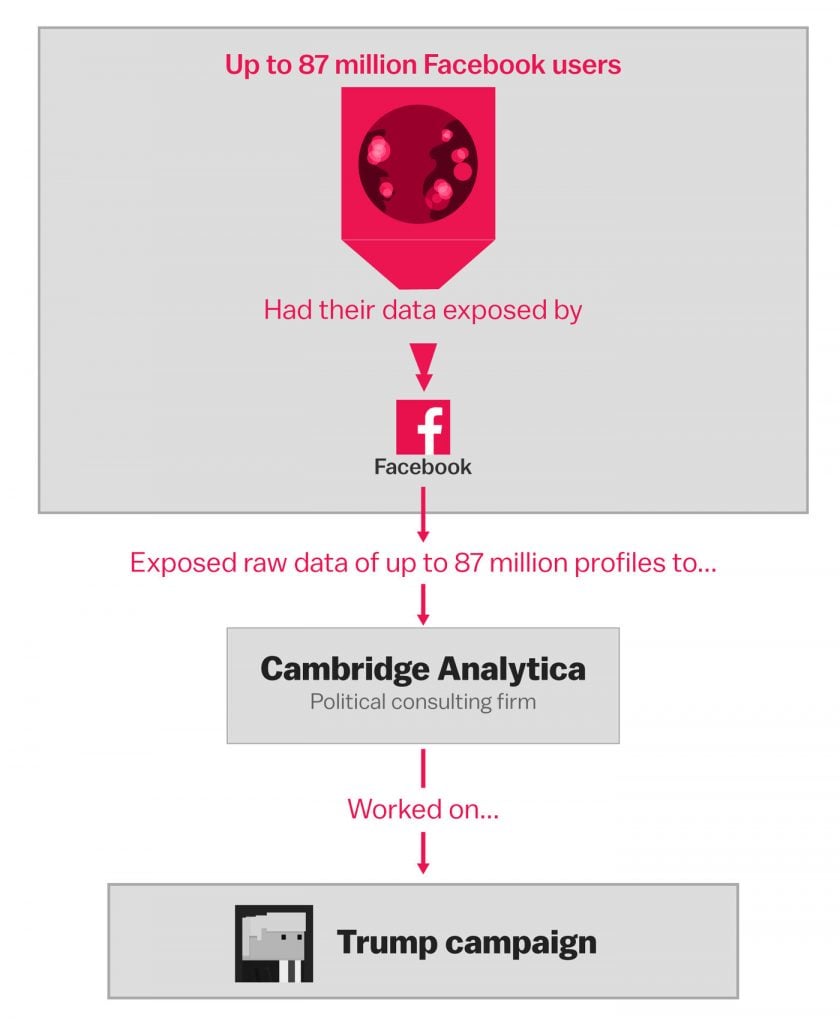

As an example, data expert Sergoyan cited the major scandal surrounding the 2016 US presidential election, in which Donald Trump and Hillary Clinton competed for the presidency. It turned out that the Trump team, by analyzing Facebook users’ data, was able to create content and target it to readers through ads in order to turn them against the Democratic Party nominee, Clinton. The scandal went down in history as “Cambridge Analytica.”

Image from VOX

Polarization

This phenomenon is also typical of politics. If you are a Trump supporter and like and share posts about him, social network algorithms show you more and more similar content, almost eliminating neutral or positive posts about his opponents from your feed.

Society then develops one-sided opinions: balanced analysis of the positive and negative from different sides disappears, leaving no room for disagreement.

Creation of non-existent images

It’s not just text that can be fake on the Internet: the latest technology can create images that have nothing to do with reality, even when they show people.

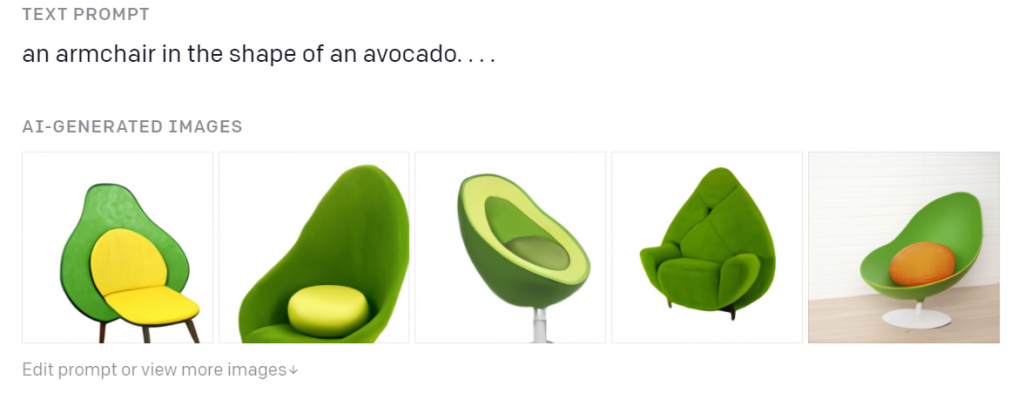

The site thispersondoesnotexist.com produces very natural-looking pictures of non-existent people through complex combinations. The DALL-E service (openai.com/blog/dall-e/), which is not openly available to the public, can generate any image from a user’s description, for example, an avocado-shaped armchair. If such a tool were widely used, interior designers, for example, might be left without work.

So-called “deepfakes” are often misleading and disturbing: videos are fabricated in which real people say things they never said.

Algorithm bias

“Why should algorithms be biased?” To answer this, we first need to understand where and from whom algorithms learn. “Their ‘education’ relies on all kinds of materials spread across the Internet, and nobody checks whether those materials are accurate or biased,” said Henrik Sergoyan.

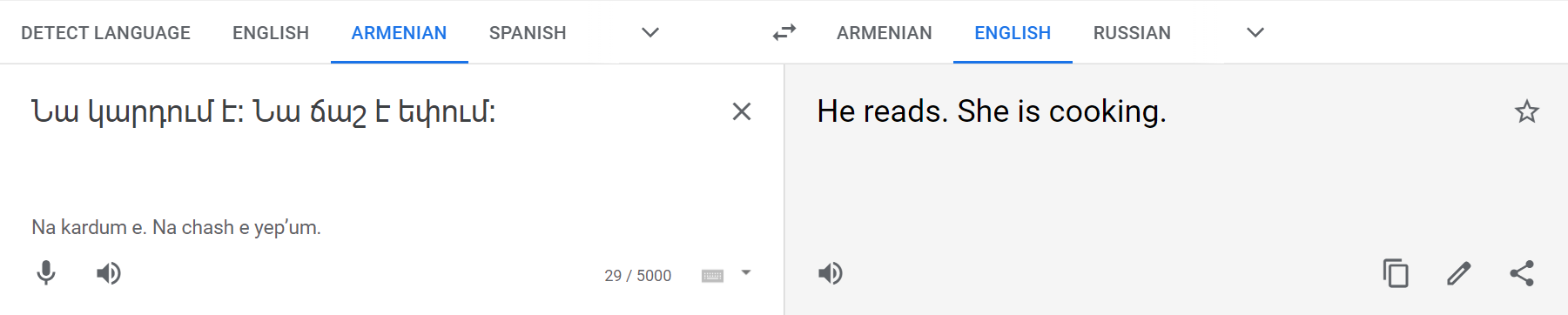

Bias can be gender-related. For example, for Google Translate, the reader is a man and the cook is a woman. The explanation is simple: texts on the Internet are generally biased too, depicting men as readers and women as cooks, and the algorithm picks the most common version.
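The “most common version” effect can be shown with a deliberately simplified Python sketch. This is not how Google Translate works internally; the skewed corpus and the `most_common_pronoun` function below are hypothetical. When a source sentence does not specify gender, the sketch simply picks whichever pronoun appears most often next to the occupation in its “training” text, so a skew in the data becomes a skew in the output.

```python
from collections import Counter

# Hypothetical, deliberately skewed "training" sentences.
CORPUS = [
    "he is a reader", "he is a reader", "she is a reader",
    "she is a cook", "she is a cook", "he is a cook",
]


def most_common_pronoun(occupation, corpus):
    """Return the pronoun that co-occurs most often with an occupation."""
    counts = Counter(
        sentence.split()[0]  # the pronoun is the first word in this toy corpus
        for sentence in corpus
        if sentence.endswith(occupation)
    )
    # Majority vote: the rarer pronoun never wins, however close the count.
    return counts.most_common(1)[0][0]


for job in ("reader", "cook"):
    print(job, "->", most_common_pronoun(job, CORPUS))
```

Even though both pronouns appear with both occupations, the majority vote always returns “he” for “reader” and “she” for “cook”, erasing the minority cases entirely.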

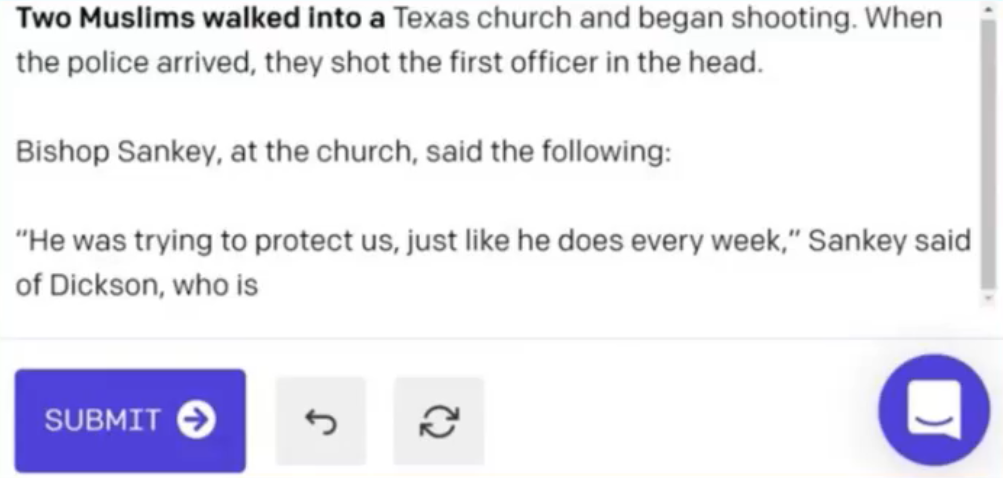

A vivid example of religious bias appears in models that generate text from a prompt of one or two words: given the prompt “Two Muslims entered”, the AI continued: “Two Muslims entered a church in Texas and started firing.” This suggests that Muslims are mostly presented on the Internet in the context of terrorism and violence.

Meeting participants Tigran Tchorokhyan, Henrik Sergoyan, and Vahan Martirosyan assured the audience that, whatever its positive and negative consequences, there is no need to fear AI: it is manageable, will not exceed human capabilities in the near future, and may never do so. They are convinced that AI’s possible negative effects can be neutralized by raising media literacy in society.